May 12, 2022

Kubernetes, commonly stylized as K8s (there are eight characters between the K and the s), is an open-source container-orchestration system for automating computer application deployment, scaling, and management. It works with a range of container tools and runs containers in a cluster, often with images built using Docker.

History

Kubernetes v1.0 was released on July 21, 2015. Up to v1.18, Kubernetes followed an N-2 support policy (meaning that the 3 most recent minor versions receive security and bug fixes). From v1.19 onwards, Kubernetes follows an N-3 support policy. At the time of writing, the current stable version is Kubernetes 1.22, the second release of 2021.
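To make the N-2 and N-3 wording concrete, here is a minimal sketch that lists which minor versions a policy covers. The function name and its parameters are illustrative assumptions, not part of any Kubernetes tooling.

```python
def supported_minor_versions(latest_minor, policy_n):
    """Return the 1.x minor versions covered by an N-<policy_n> support policy."""
    # N-2 covers the latest release plus the two before it (3 versions);
    # N-3 covers the latest release plus the three before it (4 versions).
    first_unsupported = latest_minor - policy_n - 1
    return [f"1.{minor}" for minor in range(latest_minor, first_unsupported, -1)]


print(supported_minor_versions(18, 2))  # ['1.18', '1.17', '1.16']
print(supported_minor_versions(22, 3))  # ['1.22', '1.21', '1.20', '1.19']
```

Under this reading, moving from N-2 to N-3 simply adds one more supported minor version at any given time.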

Cluster Objects

Kubernetes follows the primary/replica architecture. The components of Kubernetes can be divided into those that manage an individual node and those that are part of the control plane.

Control plane

The Kubernetes master is the main controlling unit of the cluster, managing its workload and directing communication across the system. The Kubernetes control plane consists of various components, each running as its own process, that can run either on a single master node or on multiple masters supporting high-availability clusters.

- etcd: etcd is a persistent, lightweight, distributed, key-value data store developed by CoreOS that reliably stores the configuration data of the cluster, representing the overall state of the cluster at any given point of time.

- API server: The API server is a key component and serves the Kubernetes API using JSON over HTTP, which provides both the internal and external interface to Kubernetes.
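As a rough illustration of "JSON over HTTP", the sketch below builds, but does not send, a request that would ask the API server to create a Pod. The server address, the pod name, and the helper function are hypothetical; a real cluster would also require TLS and credentials.

```python
import json
import urllib.request

# Hypothetical API server address, used only for illustration.
API_SERVER = "https://kubernetes.example.invalid:6443"


def build_create_pod_request(namespace, name, image):
    """Build (but do not send) the HTTP request that would create a Pod."""
    manifest = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"containers": [{"name": name, "image": image}]},
    }
    return urllib.request.Request(
        url=f"{API_SERVER}/api/v1/namespaces/{namespace}/pods",
        data=json.dumps(manifest).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_create_pod_request("default", "web", "nginx")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Tools such as kubectl talk to the same API, so the request body here is the JSON form of what is usually written as a YAML manifest.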

Nodes

A Node, also known as a Worker or a Minion, is a machine where containers (workloads) are deployed. Every node in the cluster must run a container runtime such as Docker, as well as the components mentioned below, which handle communication with the primary and the network configuration of these containers.

- Kubelet: Kubelet is responsible for the running state of each node, ensuring that all containers on the node are healthy. It takes care of starting, stopping, and maintaining application containers organized into pods as directed by the control plane.

- Kube-proxy: The Kube-proxy is an implementation of a network proxy and a load balancer. It is responsible for routing traffic to the appropriate container based on the IP address and port number of the incoming request.
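The routing idea behind Kube-proxy can be sketched as a lookup table keyed by destination IP and port. This is a toy model only: the class, the addresses, and the round-robin choice are assumptions made for illustration, and the real kube-proxy programs kernel-level rules (for example iptables or IPVS) rather than handling each request in Python-style code.

```python
import itertools


class ToyProxy:
    """Toy model of routing by destination IP and port (not kube-proxy itself)."""

    def __init__(self):
        self._backends = {}

    def register(self, ip, port, backends):
        # Cycle through the backends to spread requests round-robin.
        self._backends[(ip, port)] = itertools.cycle(backends)

    def route(self, ip, port):
        # Pick the next backend container for this destination.
        return next(self._backends[(ip, port)])


proxy = ToyProxy()
proxy.register("10.0.0.1", 80, ["10.1.0.5:8080", "10.1.0.6:8080"])
for _ in range(3):
    print(proxy.route("10.0.0.1", 80))
```

Three requests to the same IP and port land on the first backend, then the second, then the first again.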

Namespaces

Kubernetes partitions the resources it manages into non-overlapping sets called namespaces.
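One way to picture this partitioning is a store in which an object's name only has to be unique within its namespace. The class and the namespace names below are hypothetical and chosen for the example.

```python
class NamespacedStore:
    """Toy object store where names are unique per namespace, not globally."""

    def __init__(self):
        self._objects = {}

    def create(self, namespace, name, spec):
        key = (namespace, name)
        if key in self._objects:
            raise ValueError(f"{name!r} already exists in namespace {namespace!r}")
        self._objects[key] = spec

    def list_names(self, namespace):
        return sorted(name for (ns, name) in self._objects if ns == namespace)


store = NamespacedStore()
store.create("team-a", "web", {"image": "nginx"})
store.create("team-b", "web", {"image": "nginx"})  # same name, different namespace
print(store.list_names("team-a"))  # ['web']
```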

DaemonSets

Normally, the locations where pods are run are determined by the algorithm implemented in the Kubernetes Scheduler. For some use cases, though, there could be a need to run a pod on every single node in the cluster. This is useful for use cases like log collection, ingress controllers, and storage services. The ability to do this kind of pod scheduling is implemented by the feature called DaemonSets.
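The contrast with ordinary scheduling can be sketched in a few lines: instead of picking one node, a DaemonSet-style placement produces one pod per node and grows when a node joins. The function and the node names are illustrative assumptions, not the controller's actual code.

```python
def daemonset_placement(nodes, pod_name):
    """Return one pod per node, keyed by node name."""
    return {node: f"{pod_name}-{node}" for node in nodes}


nodes = ["node-1", "node-2"]
print(daemonset_placement(nodes, "log-collector"))

# When a node joins the cluster, the placement grows to cover it as well.
nodes.append("node-3")
print(daemonset_placement(nodes, "log-collector"))
```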

Workload Objects

- Pods

A pod is a grouping of containerized components. A pod consists of one or more containers that are guaranteed to be co-located on the same node.

- ReplicaSets

A ReplicaSet’s purpose is to maintain a stable set of replica Pods running at any given time. As such, it is often used to guarantee the availability of a specified number of identical Pods.
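Maintaining a stable set of replicas amounts to repeatedly comparing the desired count with what is running and correcting the difference. The sketch below shows a single such comparison; the function name and the action tuples are assumptions made for the example, not the ReplicaSet controller's real interface.

```python
def reconcile(desired_replicas, running_pods):
    """Return the actions needed to move from the running pods to the desired count."""
    difference = desired_replicas - len(running_pods)
    if difference > 0:
        # Too few pods: request one creation per missing replica.
        return [("create", None)] * difference
    # Too many pods (or exactly enough): delete any surplus from the end of the list.
    return [("delete", pod) for pod in running_pods[desired_replicas:]]


print(reconcile(3, ["web-a"]))           # two "create" actions
print(reconcile(1, ["web-a", "web-b"]))  # [('delete', 'web-b')]
print(reconcile(2, ["web-a", "web-b"]))  # []
```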

Services

A Kubernetes service is a set of pods that work together, such as one tier of a multi-tier application.
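In practice, the set of pods behind a service is usually picked out with a label selector. A minimal sketch of that matching, with hypothetical pod names and labels:

```python
def select_pods(pods, selector):
    """Return the names of pods whose labels include every selector key/value pair."""
    return [
        pod["name"]
        for pod in pods
        if selector.items() <= pod["labels"].items()
    ]


pods = [
    {"name": "web-1", "labels": {"app": "shop", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "shop", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "shop", "tier": "backend"}},
]
print(select_pods(pods, {"tier": "frontend"}))  # ['web-1', 'web-2']
```

Here the two frontend pods form one tier of the application, and the selector groups them without the service needing to know individual pod names.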

Volumes

By default, the filesystem in a Kubernetes container provides only ephemeral storage. A restart of the pod will wipe out any data on such containers, and therefore, this form of storage is quite limiting in anything but trivial applications. A Kubernetes Volume provides persistent storage that exists for the lifetime of the pod itself.
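As an illustration, the sketch below builds a Pod manifest in which two co-located containers mount the same `emptyDir` volume, a volume type that lives as long as the pod does. The pod, container, and volume names are made up for the example, and the helper that checks the mounts is hypothetical.

```python
import json

pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "shared-data-demo"},
    "spec": {
        # The volume is declared once at pod level...
        "volumes": [{"name": "scratch", "emptyDir": {}}],
        # ...and mounted by each container that needs it.
        "containers": [
            {
                "name": "writer",
                "image": "busybox",
                "volumeMounts": [{"name": "scratch", "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox",
                "volumeMounts": [{"name": "scratch", "mountPath": "/data"}],
            },
        ],
    },
}


def undeclared_mounts(manifest):
    """Return volume names that containers mount but the pod never declares."""
    declared = {volume["name"] for volume in manifest["spec"]["volumes"]}
    mounted = {
        mount["name"]
        for container in manifest["spec"]["containers"]
        for mount in container.get("volumeMounts", [])
    }
    return sorted(mounted - declared)


print(json.dumps(pod_manifest, indent=2))
print(undeclared_mounts(pod_manifest))  # []
```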

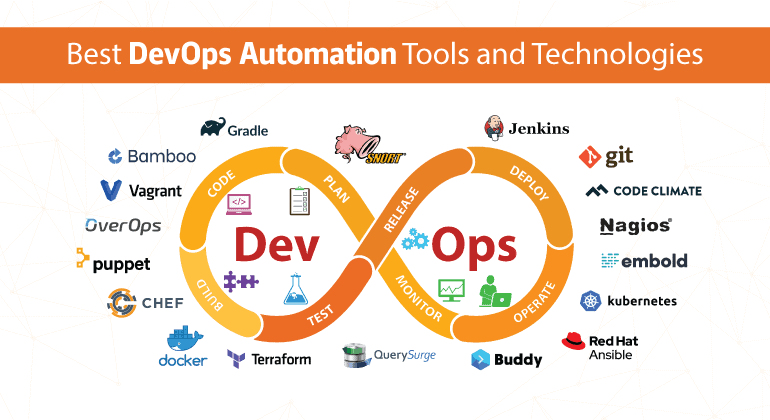

Kubernetes Trends

The adoption of DevOps practices on Kubernetes platforms is relatively more mature than the associated software development lifecycle (SDLC). However, with emerging patterns like GitOps, this is also an anticipated area of growth.

The SDLC for Kubernetes and microservices-based applications is still evolving, and this is where there might be significant evolution in the next few years.

The lead headline in the recent Cloud Native Computing Foundation (CNCF) survey states that the “use of containers in production has increased by 300% since 2016.” With this hyper-growth come challenges that users are grappling with, not only individually but also as a community.

In this data-driven era, cloud-native frameworks have taken the business world by storm.

Kubernetes is one such framework that’s making it big in the cloud computing world. While several businesses are rapidly shifting to this container management tool, many are still working out whether they need Kubernetes at all. The decision isn’t exactly straightforward, and each business needs to review its requirements carefully before committing.

Kubernetes is essentially a container orchestrator and thus requires a container runtime. This is why Kubernetes only works with containerized applications, such as those packaged with Docker.

Often, a single heterogeneous environment requires more than just one cloud computing service to execute a microservice effectively. Using multiple cloud computing services or cloud storage solutions in a particular heterogeneous environment is called multi cloud.

Multi cloud has enabled the development, deployment, and management of numerous microservices. It also helps businesses reduce dependency on one vendor, lower latency, keep costs manageable, adhere to local data protection laws, gain flexibility of choice, and more.

If you are dealing with such multi cloud architectures and trying to understand when to use Kubernetes, the answer is “right away”! Hosting workloads in Kubernetes enables you to use the same code on every cloud that’s in your multi cloud architecture.

A hybrid cloud is different from a multi cloud infrastructure. When a business deploys more than one computing or storage solution of the same type, it is referred to as a multi cloud infrastructure. However, the deployment of multiple computing or storage solutions of different types is called a hybrid cloud infrastructure.

For example, if a business uses three private cloud environments fulfilled by three different vendors (and no other cloud environments), we would say that the company utilizes a multi cloud infrastructure. On the other hand, if a business uses three private cloud environments and two public cloud environments, we would say that they make use of hybrid cloud infrastructure.
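The distinction drawn above can be written as a small classifier. It encodes only the definitions used in this article (same type means multi cloud, mixed types means hybrid cloud); the function name and the "single cloud" fallback are assumptions added for the example.

```python
def classify_infrastructure(environment_types):
    """Classify a list of cloud environment types using the article's definitions."""
    if len(environment_types) < 2:
        return "single cloud"
    # One distinct type across several environments is multi cloud;
    # a mix of types (for example private and public) is hybrid cloud.
    return "multi cloud" if len(set(environment_types)) == 1 else "hybrid cloud"


print(classify_infrastructure(["private"] * 3))                   # multi cloud
print(classify_infrastructure(["private"] * 3 + ["public"] * 2))  # hybrid cloud
```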

Now, implementing a hybrid cloud is not exactly a cakewalk. Kubernetes can help developers with this challenge and enable them to deploy and manage better hybrid cloud infrastructures.

Kubernetes Latest Stable Version

The latest release of Kubernetes is 1.22, the second release of 2021.

This release consists of 53 enhancements: 13 enhancements have graduated to stable, 24 enhancements are moving to beta, and 16 enhancements are entering alpha. Also, three features have been deprecated.

Major Changes

- Removal of several deprecated beta APIs

- API changes and improvements for ephemeral containers (a special type of container that runs temporarily in an existing Pod to accomplish user-initiated actions such as troubleshooting).

- As an alpha feature, all Kubernetes node components (including the kubelet, kube-proxy, and container runtime) can be run as a non-root user.