KNIME Analytics Platform: Machine Learning Made Easy

May 26, 2021

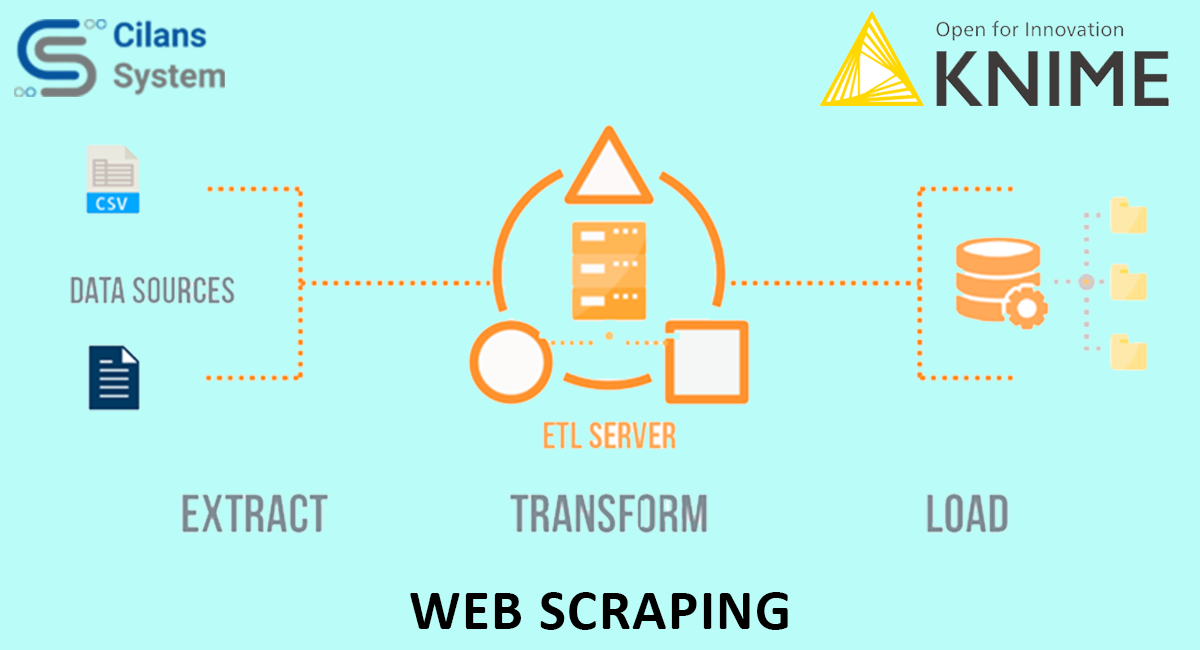

Web Scraping with KNIME

May 27, 2021Introduction

Data Science is abounding. It considers different realms of the data world including its preparation, cleaning, modeling, predicting, and much more. If you are new to Knime, kindly read the blog “Beginner’s guide for KNIME: What, Why, How” and also understand “Basic building blocks of KNIME for Retail analytics”. The retail sector is ever-growing and one such example can be BigMart which is a Retail Chain in the USA. The aim of this article is to build a predictive model and find out the sales of each product at a particular story without writing a piece of code! You can download the dataset and its description from here. Also, you can import various Workflows, Datasets, Nodes, Components, and much more from KNIME Hub.

Objective

In this data, we are going to build a regression model to predict sales based on the different features of BigMart. As we are going step by step first and the most important thing is data cleaning. After that, we are going to visualize our data for basic analysis. By following the below steps, in the end, you are making the full workflow.

1. Importing the data files

Let us start with the first, yet very important step in understanding the problem – importing our data.

Drag and drop the “File Reader Node” to the workflow and double click on it. Next, browse the file you need to import into your workflow.

In this article, as we will Predicting Sales of the BigMart Retail Chain, hence we will import the dataset of BigMart Sales.

2. How do you clean your Data?

GIGO (Garbage In Garbage Out) is generally true for any Data Science projects. Results are as good as data.

Before training your model, we need to perform Data Cleaning and Feature Extraction. You can impute missing values using “Missing value Node” but KNIME also provides the facility of Interactive Data Cleaning.

2.1 Finding Missing Values & Imputations

Before we impute values, we need to know which ones are missing.

Go to the node repository again, and find the node “Missing Values”. Drag and drop it, and connect the output of our File Reader to the node.

When we execute it, our complete dataset with imputed values is ready in the output port of the node “Missing Value”

Here, in the categorical variable (outlet_size) missing value we cannot impute it randomly so using the crosstab technique first we find the relatively appropriate value, which we can impute.

3. Machine Learning modeling in KNIME

Let us take a look at how we would build a machine learning model in KNIME. After data cleaning, pre-processing and feature engineering we know that for modeling, first, we partition our data. Go to Node repository and import “Partitioning Node”.

3.1 Partitioning

In the configure of Partitioning Node, we have to specify the size of the first partition, click OK and execute the Node. You can choose from a variety of Partitioning techniques.

3.2 Implementing a Random Forest Model

Go to the Node repository again, and find the “Random Forest Learner Node” and “Random Forest Predictor Node”. Drag and drop it, and connect the output of our Partitioning Node as below.

In Random forest learner configuration, we have to specify our target column as item_out_sales, click OK and execute the Node.

In Random Forest Predictor configuration, you can change the Prediction column name, press OK and execute the Node to see the Prediction, right-click on the Node, and select prediction output.

3.3 Model Evaluation

Go to the Node repository and drag and drop “Numeric Scorer Node” and Also you can use different techniques for model evaluation you can find different Node in the Node repository.

By right-clicking on Numeric Scorer and selecting Statistics you can find the values of R² and errors. This is the final workflow diagram that was obtained.

Observations

In this exercise, we only did the analysis using a random forest algorithm. In theory, we would use different models and feature engineering techniques to derive the most optional approach. Here, our objective is just to cover how KNIME can be used to solve a typical ML problem.

Using the R² measure we can validate our model. For example, an r-squared of 50% reveals that 50% of the data fit the regression model. Generally, a higher r-squared indicates a better fit for the model.

Authored by:

Team Cilans: Nikhil, Chintan, Kashyap

For additional articles on the Knime Blog series, visit www.cilans.net

Contact us at info@cilans.net for more!